Startup Stories: Will Story Squad Beat Google Vision?

The Problems with Handwriting OCRs and Our Solution

StorySquad (Now Scribble Stadium) is a “gaming” platform that turns your child into a reader, writer, and illustrator. It encourages creation and is an alternative to Fortnite and YouTube that children enjoy and parents trust. Each week we send our users a short story to read. Then kids write their own story based on the characters they just read about and draw an illustration (on actual paper to reduce screen time and encourage sustained focus). Then the child can upload pictures of their work to our platform where we assign a readability score that helps the child get better at writing over time!

We also maintain the largest dataset of children's handwriting on the internet. I’m Jack Ross and I am a data scientist that helped build this platform.

I joined StorySquad along with a cross functional team of 6 engineers through the Labs portion of Lambda School. (Now Bloom Tech)

The Mission

As a data scientist, the problems we would have to solve to make this platform a reality was clear.

Task 1: API

Setup an API endpoint that can receive images (as Amazon S3 Bucket URLs) and returns a complexity/readability score based on the images received.

Task 2: OCR

Build a handwriting OCR (optical character recognition) model that can extract the text from a users handwritten short story.

Task 3: Complexity and Readability Score

Implement a metric (or series of metrics) that measures the readability and complexity of user generated content (short stories) and return it to the child so they can get better at reading and writing over time.

Tech Stack

API

For the data science API we chose to use FastAPI.

(FastAPI is a modern, fast (high-performance), web framework for building APIs with Python 3.6+ based on standard Python type hints.) We chose FastAPI for the data science API because of its automatic documentation features and ease of use. This will allow us to prototype fast and act as if we were in production while automatically building out documentation which the next generation of Story Squad engineers will use.

Handwriting OCR

To have a reliable OCR, you need good data. The first step I took in building the OCR was to setup an image preprocessing pipeline with OpenCV.

This pipeline is used to standardize the DPI (dots per inch) of an image, increase the contrast to bring out important features, binarize the image, reduce the amount of noise, and deskew the image so lines in a paragraph could be more easily deciphered.

To extract the text from the preprocessed image, I used Python-tesseract, a wrapper for Google’s Tesseract-OCR Engine. Python-tesseract is an optical character recognition (OCR) tool for python. That is, it will recognize and “read” the text embedded in images. This is what enables our high accuracy.

Complexity and Readability Score

TextStat was the tool of choice for calculating a reliable readability/complexity score. TextStat is a Python package to calculate statistics from text to determine readability, complexity and grade level of a particular corpus. I use this package to return the Flesch-Kincaid Grade of the given user submitted text. This is a grade formula in that a score of 9.3 means that a ninth grader would be able to read the document.

Update: Recently I removed TextStat as our scoring library of choice, instead opting to implement the raw Flesch-Kincaid statistic to cut down on time complexity/runtime and to avoid possible errors caused by future library updates.

The Problem with Children's Handwriting and OCRs

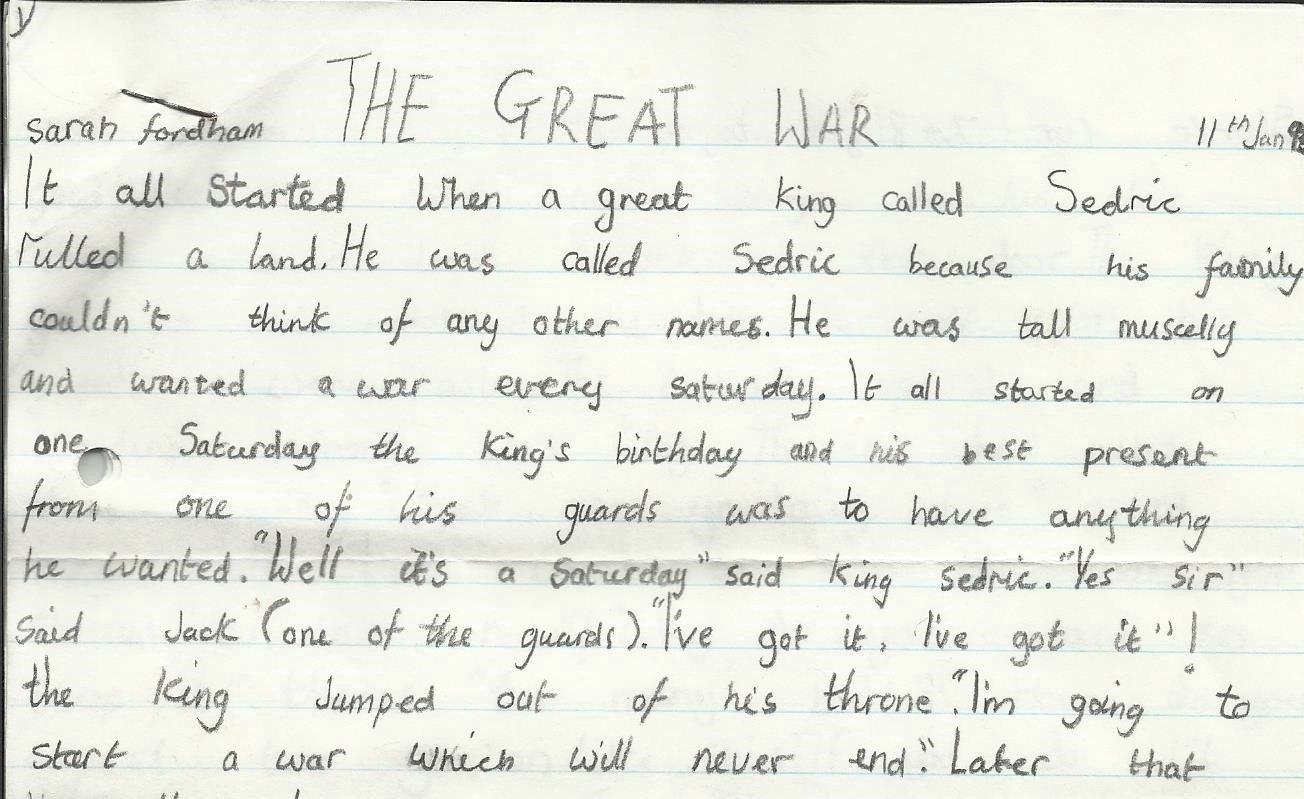

The best OCRs today (Google Vision, Amazon Rekognition) do a pretty good job of translating most handwritten documents into text. Our biggest issue with implementing Story Squads OCR Engine was that even in state-of-the-art recognition systems, the lack of neatness in a child’s handwriting gave such a large amount of errors that we were unable to use that data to generate a reliable complexity score. (Test with Google Vision below)

Google Vision results on child’s handwriting

We were able to partially overcome the problem of detecting children's handwriting by creating the image processing pipeline (OpenCV) discussed in the section above. By optimizing the image for an OCR, we were able to achieve a much higher accuracy. (Character level) Using this preprocessed image data on a custom pytesseract OCR model trained on fonts created from children's handwriting, we attained an accuracy high enough to output reliable readability/complexity scores (grade level) based on user submissions.

Before Image Processing

After Image Processing

Flesch-Kincaid Grade Level Score: 5.1 (5th grade writing level)

Story Squad: Where We Are

Currently we have shipped all of the features discussed above. Our next goal is to retrain the custom Tesseract model on a critical mass of data and explore new preprocessing techniques to boost the character level accuracy of that model. I have also begun implementing a Post-Processing Pipeline in which the output of our Tesseract model would be fed through a language model performing autocorrection. The engineering team at Story Squad is also in talks about switching our platform to Rust, but that’s worth it’s own article.

Reflections

Story Squad and Lambda Labs has been such an incredible experience so far and I’ve learned so much in the past few weeks about computer vision, machine learning, and working with a team. Building a product with a cross functional team has been a highlight. I’ve picked up a myriad of different Front End, Back End, and even GitHub tips. Plus I’ve made some incredible friends along the way.

I hope to see Story Squad used at homes and possibly even at schools around the country to inspire the next generation of creators. Technical challenges to make this a reality will include achieving a never before seen accuracy in handwritten text of children but this is a challenge that I can’t wait to try and solve.

I’ve also grown a lot as a person and developer over the duration of my time at Story Squad. Writing and receiving peer feedback has given me new insights into how I work that are already allowing me to communicate with my team more effectively. One day I would like to become a Autonomous Vehicle Engineer and the computer vision and machine learning skills that I’ve picked up over the course of this project will be integral to hitting that goal and getting a step closer to helping the planet on a massive scale.

If you’ve made it this far, thank you for reading.